When should time-on-task be longer, rather than shorter, for an optimal user experience? The answer comes down to which user activities we define as “tasks”. This article proposes a way of categorizing user activities that lets us determine whether time-spent in an activity should ideally be shorter or longer.

tl;dr

- Whether product teams should aim to lower the time-spent in a user activity depends on how the activity is categorized.

- Completion-oriented: a user only wants to have the activity done for an extrinsic goal. This activity should always have a lower time spent to increase satisfaction.

- Emotion-oriented: a user wants to do the activity because it’s intrinsically satisfying. This activity should have a longer time spent (to a point) to increase satisfaction.

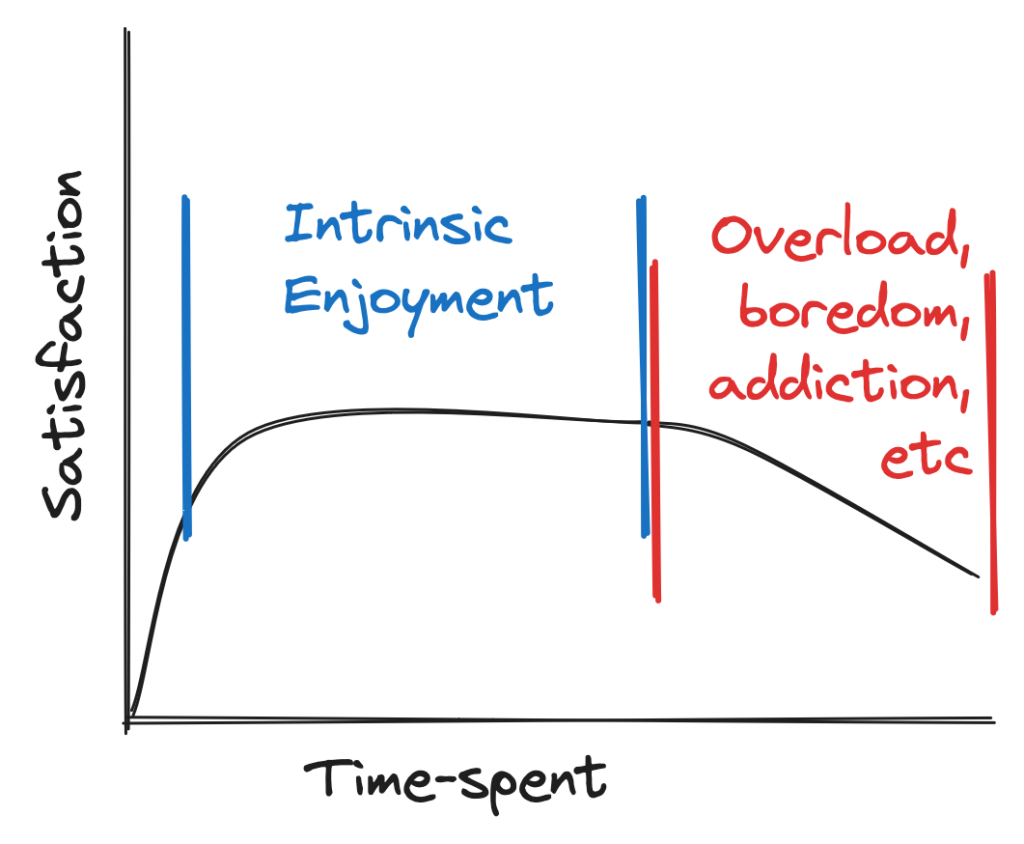

- Emotion-oriented activities have a diminishing return on how much time-spent increases satisfaction due to things like burnout, boredom, addiction, etc.

- Users often switch between activity types fluidly, and a good measurement strategy needs to account for this.

- User intent can shift what the activity type is, e.g.: watching a video for entertainment vs. watching a video to obtain information for a math problem.

- You can use this theory to better work with cross-functional partners and craft effective user measurement strategies.

Time-on-task’s history

The measurement of time-on-task was present in the earliest explorations of usability’s definition, along with the idea that time-on-task should ideally be shorter. According to Sauro and Lewis, usability itself was explored privately by IBM in the late 1980s and more publicly in Europe through the MUSiC project. In both cases, the idea that minimizing time-spent meant greater efficiency was tacit. This made sense because both IBM and the MUSiC project were focused on workplace/productivity applications.

The goal of lowering time-on-task continued into more modern usability measurement work, centering on the ISO 9241-11:2018 usability definition. Sauro and Kindlund, in their Single Usability Metric (SUM) paper, mention in just a footnote that time-on-task should aim to be lower, with no further explanation. This was empirically corroborated by their results, which showed a negative correlation: higher completion rates and satisfaction correlated with lower time-on-task. Sauro and Lewis’s additional explorations into correlations of usability metrics showed the same thing again: as completion and satisfaction increased, time-on-task decreased. The paper mentioned that the products tested were “printers, account and human resources software, websites, and portals”, which again indicated that the user goals were primarily focused on workplace tasks and productivity.

Moving beyond usability measurement

As a user experience researcher, my work extends beyond the scope of usability measurement and into other disciplines such as product management and data science. Many metrics for product success involve engagement, measured via time-spent in an app or product. Engagement as a focus contrasts with the typical 1980s computer experience of workplace productivity applications. Billions of people now use computers/phones primarily for leisure rather than work/productivity. This common engagement metric approach has led to the question being posed, “Does more time-on-task possibly represent more engagement and is therefore a good thing?” The answer is unclear until we start to properly categorize user activities by their users’ goals or orientations.

Categorizing activities into completion-oriented and emotion-oriented

The confusion over whether task times should increase or decrease alongside other desirable user metrics comes from lumping all user activities together simply as ‘tasks’. The distinction becomes clear when we categorize activities by user orientations.

Completion-oriented activity

Some user activities have a discrete and correct outcome: send money to a friend, take a note, or change a setting. These are completion-oriented activities. This activity type also represents a typical flow where a UX researcher would measure usability. Users don’t primarily care about the feeling of doing the activity; they want what occurs when the activity is complete. Users do the activity to have it done.

We would expect user satisfaction to increase when these completion-oriented activities are done more quickly.

Emotion-oriented activity

Some user activities don’t have a clear and correct outcome, but have an emotional, hedonic quality: scroll through posts or watch a funny video. Users intrinsically value the act of engaging with these activities. These are emotion-oriented activities; they are often measured through metrics like time-spent or increased user actions. Users do the activity for the feeling it brings while presently doing the activity.

We would expect user satisfaction to increase with emotion-oriented activities as the user spends more time in the activity (to a degree, we’ll get to this).

Another way to contrast completion- and emotion-oriented activities is that they are valued extrinsically and intrinsically, respectively. A user undertakes a completion-oriented activity like transferring money to have the money in their new account, not for the feelings they get while clicking the buttons to make the transfer. A user undertakes an emotion-oriented activity like watching a music video to have the feeling of watching the music video, not to have watched the video.

This categorization uncovers the ways that we need to treat each activity differently in our measurement approaches to properly assess what users want.

Implications and applications

Measurement approaches

Completion- and emotion-oriented activities are measured in different ways.

Completion-oriented activities are the prototypical usability testing task. Time-spent on completion-oriented activities is typically measured through larger-scale usability benchmarking. Users are given an explicit goal, and researchers either manually calculate the time spent to complete the goal or use a tool (UserZoom, MUIQ). One benefit here is that we know exactly what the user intends to do, but the downside is that this testing is time-consuming and resource-intensive (recruitment, manual analysis, etc.).

Time-spent on emotion-oriented activities is more often measured where the activity naturally occurs. This is typically done by in-app analytics systems, whether homegrown within an organization (querying log events in a SQL table) or a third-party tool (Pendo, Hotjar, etc.). One benefit here is that the data are extremely naturalistic and automatic to capture once initially set up. The major downside is that the data are noisy: we don’t know each user’s intent. Another key consideration is how to assess when there may be too much time spent on an activity in a curve of diminishing returns.
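As a rough illustration of the homegrown route, here is a minimal Python sketch that derives time-spent per session from raw log events. The event shape (user ID, session ID, ISO timestamp) and the function name `session_durations` are assumptions made up for this example, not a real analytics schema. Measuring from the first to the last logged event also undercounts any time spent after the final event, so treat the result as a lower bound.

```python
from datetime import datetime


def session_durations(events):
    """Return {(user_id, session_id): seconds between first and last event}.

    events: iterable of (user_id, session_id, iso_timestamp) tuples.
    This shape is hypothetical; adapt it to your own logging table.
    """
    first_last = {}
    for user_id, session_id, timestamp in events:
        t = datetime.fromisoformat(timestamp)
        key = (user_id, session_id)
        if key not in first_last:
            first_last[key] = (t, t)
        else:
            first, last = first_last[key]
            first_last[key] = (min(first, t), max(last, t))
    return {
        key: (last - first).total_seconds()
        for key, (first, last) in first_last.items()
    }


# Hypothetical log rows, as they might come back from a SQL query.
events = [
    ("u1", "s1", "2024-01-01T10:00:00"),
    ("u1", "s1", "2024-01-01T10:12:30"),
    ("u2", "s9", "2024-01-01T11:00:00"),
]
durations = session_durations(events)
```

Note that nothing in this output tells us why the user was there, which is exactly the noise problem described above.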

Emotion-oriented diminishing returns

Emotion-oriented activities bring users more satisfaction by the act of the activity itself. However, there is always a limit to how much of the activity will continue to bring a user satisfaction. Any emotion-oriented activity has a curve of diminishing returns that leads to an eventual satisfaction decrease.

Let’s imagine an emotion-oriented activity most of us are familiar with: scrolling on Facebook, Instagram, Reddit, or Twitter (X) to pass some time. Initially, you may be looking to escape boredom or see some interesting images and have the intrinsic enjoyment you get when you scroll through the feed. Eventually, you may start to get bored of this particular activity if you feel like you have no other choice but to keep scrolling (say you’re waiting for your name to be called in a waiting room). Or, if you scroll your favorite app too often every day, you may enjoy it for a few moments and then realize you’ve already spent too much time scrolling today and worry that you’re overusing the app.

It’s important to build this curve into a measurement strategy for a product. This could be done by relating long-term retention to time-spent on an app’s key emotion-oriented activity, or by surveying users about their satisfaction after varying durations of activity. These methods can help a team find the sweet spot for user engagement.
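To make the survey approach concrete, here is a minimal Python sketch that buckets responses by time-spent and looks for the bucket with the highest average satisfaction. The data, the 10-minute bucket size, and the function names are all hypothetical; a real analysis would need far more responses and some check that the peak isn’t just noise.

```python
from collections import defaultdict
from statistics import mean


def satisfaction_by_duration(responses, bucket_minutes=10):
    """Average satisfaction per time-spent bucket.

    responses: iterable of (minutes_spent, satisfaction_score) pairs.
    Returns a list of (bucket_start_minutes, mean_score), sorted by bucket.
    """
    buckets = defaultdict(list)
    for minutes, score in responses:
        bucket_start = int(minutes // bucket_minutes) * bucket_minutes
        buckets[bucket_start].append(score)
    return sorted((start, mean(scores)) for start, scores in buckets.items())


def sweet_spot(curve):
    """Return the (bucket_start, mean_score) point with the highest mean score."""
    return max(curve, key=lambda point: point[1])


# Made-up survey data: (minutes in the feed that day, 1-5 satisfaction rating).
responses = [(5, 3), (8, 3), (15, 4), (18, 5), (25, 5), (28, 5), (35, 3), (55, 2)]
curve = satisfaction_by_duration(responses)
peak_bucket, peak_score = sweet_spot(curve)
```

With this invented data, satisfaction rises through the 20 to 29 minute bucket and falls afterward, which is the shape the diminishing-returns idea predicts.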

Measuring time-spent in emotion-oriented activities comes with an added challenge due to its naturalistic nature: users rarely fit neatly into a single activity type when using an app in the real world.

Activity switching

These activity orientations often exist sequentially and side-by-side. Users can switch between orientations rapidly. Let’s break down the example of watching a TV show on Netflix:

- Find an episode to watch and play it (completion-oriented)

- Watch the video (emotion-oriented)

- Adjust the volume (completion-oriented)

- Watch the video, continued (emotion-oriented)

- Add the show to “My shows” list to watch later (completion-oriented)

The user in a fairly typical example switched between completion- and emotion-oriented activities multiple times. When measuring engagement of emotion-oriented activities, we need to attempt to control for how much of the time is interrupted or shared with completion-oriented activities.
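One simple way to start controlling for this is to label each segment of a session with its orientation and look at how the time splits. The sketch below does that in Python for the Netflix example; the `Segment` structure, the labels, and the durations are invented for illustration, and in practice the labeling itself is the hard part.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    activity: str
    orientation: str  # "completion" or "emotion" (labels assumed for this sketch)
    seconds: float


def time_by_orientation(segments):
    """Sum the seconds spent in each orientation."""
    totals = {"completion": 0.0, "emotion": 0.0}
    for segment in segments:
        totals[segment.orientation] += segment.seconds
    return totals


def emotion_share(segments):
    """Fraction of session time spent in emotion-oriented segments."""
    totals = time_by_orientation(segments)
    total = sum(totals.values())
    return totals["emotion"] / total if total else 0.0


# The Netflix session from the list above, with made-up durations.
session = [
    Segment("find and play an episode", "completion", 90),
    Segment("watch the video", "emotion", 600),
    Segment("adjust the volume", "completion", 10),
    Segment("watch the video, continued", "emotion", 1200),
    Segment("add the show to a list", "completion", 20),
]
share = emotion_share(session)
```

A session where the emotion-oriented share drops unexpectedly may be a sign that completion-oriented friction is inflating total time-spent.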

How many times does a user adjust the volume in a Netflix video? Does the action occur many times in quick succession, indicating a failure in the first attempts to choose the intended volume? Does the intermittent volume changing occur at the same moment in the episode across users, indicating poor audio mixing within the episode? All of these things could add more time that the video is being watched (good engagement), while actually detracting from the user experience (bad usability). We need to be sure our emotion-oriented activity isn’t overly saturated with completion-oriented activities underneath.
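The “quick succession” question can be approximated from event timestamps. Here is a minimal Python sketch that counts bursts of volume adjustments within a single viewing session. The thresholds (a 5-second gap and at least 3 adjustments) are arbitrary assumptions for the example and would need tuning against real data.

```python
def count_adjustment_bursts(timestamps, max_gap_seconds=5.0, min_events=3):
    """Count bursts of rapid adjustments.

    A burst is a run of at least `min_events` adjustments where each one
    occurs within `max_gap_seconds` of the previous one.
    timestamps: seconds into the video at which the volume was changed.
    """
    bursts = 0
    run_length = 0
    previous = None
    for ts in sorted(timestamps):
        if previous is not None and ts - previous <= max_gap_seconds:
            run_length += 1
        else:
            # The previous run has ended; count it if it was long enough.
            if run_length >= min_events:
                bursts += 1
            run_length = 1
        previous = ts
    # Count the final run if it qualifies.
    if run_length >= min_events:
        bursts += 1
    return bursts


# Hypothetical volume-change times (seconds into the episode) for one viewer.
volume_changes = [100, 102, 104, 900, 1500, 1502, 1503, 1505]
burst_count = count_adjustment_bursts(volume_changes)
```

In this made-up session there are two bursts (around 100 seconds and around 1500 seconds), while the single change at 900 seconds is ignored. Comparing where bursts fall across many viewers of the same episode would be one way to probe the audio-mixing question.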

In addition to switching between orientations across activities, each activity could have a different orientation depending on the intent of each user.

User intent defines the activity type

In each real-world application, the activity’s orientation depends on the context of the user. For example, watching a YouTube video is typically an emotion-oriented activity, done to enjoyably pass time. However, it’s possible that the user is a student who needs the information in the video to solve a math problem. In this instance, we would expect satisfaction to be higher the shorter the viewing time, as long as the user completes their goal of obtaining the information they need for the problem.

In traditional completion-oriented activities, this isn’t often a problem because of the way we measure them in a research study: we state the activity’s intent in the instructions when we set up the usability study. In emotion-oriented activities, it’s not as straightforward. User intent could be clarified through intercept surveys or by categorizing content types on the product side (e.g.: knowing which video is a math problem video vs. a comedy video).

Applying this information

Working with (B2B) product teams

Given this new understanding of product goals around time-spent in user activities, you can use this framework in your discussions with product teams. As a UX researcher, this has come up especially frequently for me in business-oriented products. Many product professionals see more time as more engagement. In a B2B context, this is unlikely to be the case.

Say your team defines a new UI flow for creating a remote server configuration in your developer product. Sure, having more setup options than the previous bare-bones version is more satisfying: a developer can now have their server set up fully and correctly from the start. It would seem more time-on-task is better here. However, wouldn’t it be even better if those options were pre-selected based on context and didn’t even need to be filled out? In our thought experiment, that answer is “yes”! If we simply leaned on the classic product assumption that more time means more good engagement, the team would stop short of optimizing the flow in a significant way. This would be a lost opportunity because we know that developers don’t intrinsically value managing their server settings; they manage them to get their job done, which is having a functioning server.

I’ve brought this conversation up before with good results on an interdisciplinary team – these concepts are not fundamentally difficult to understand, they simply add another dimension to product thinking that may not translate from traditional consumer contexts to B2B contexts.

Working with other frameworks

This concept of completion- and emotion-oriented activities can be used in other frameworks as well. One example would be Jobs-to-be-Done. If you’re not familiar, it’s an innovation-focused set of methods that posits a person uses a product to meet their goal, not to use the product. The most common example: a person buys a drill because they want a 1/4″ hole, not to have a drill.

The idea of a “job” can be defined just like our activities above. A “job” might be completion-oriented (learn to fix my roof) or emotion-oriented (feel confident about home ownership). Applying our thinking to these types of jobs will help you correctly define your job statements, which require a verb and an object to show the objective (“minimize time to learn how to attach shingles” vs “maximize breadth of knowledge about home repairs”). The former focuses on efficiency due to the job type and the latter focuses on the intrinsic value of gaining knowledge.

JTBD is just one framework where this may apply – if you can think of others, please leave a comment on the LinkedIn post for discussion.

Next steps

I’ve laid out what I think is a well-reasoned set of hypotheses:

- In completion-oriented activities, satisfaction negatively correlates with time-spent in a user activity.

- In emotion-oriented activities, satisfaction positively correlates with time-spent in a user activity, until it doesn’t (diminishing returns, followed by a decrease).

These are still just hypotheses. I’ve seen some anecdotal success with this framework, but I haven’t empirically tested these hypotheses at scale. I plan to do this in the future to validate the way we should classify user activities related to usability and engagement.

Conclusion

So, can longer task times be a good thing? It depends on how we define “task” – to avoid conflating concepts, we need to speak more generally about a user’s “time-spent”. The proposed model shows how the ideal time-spent can vary depending on whether a user activity is completion-oriented (focused on a specific goal) or emotion-oriented (done for the inherent enjoyment).

Consider using the model of completion- or emotion-oriented user activities in your work as a UX researcher. This can be particularly helpful when defining metric goals with cross-functional partners, specifically on whether to optimize for speed (less time-spent) or engagement (more time-spent).

Do you have thoughts on this theory of organizing time-spent measurement by user intent? Weigh in on LinkedIn.

Thanks to Lawton Pybus and Thomas Stokes for their help shaping an early draft of this post.

One response to “Can longer task times for users be a good thing?”

[…] Can longer task times for users be a good thing? (12min) We often use Task Completion Time as a metric: the lower the time, the better the experience, right? Carl J. Pearson introduces the idea that this depends on how the activity is categorized. Lowering the time for completion oriented activities done for extrinsic goals (sending money to a friend) increases satisfaction. […]