Introduction

Why does UX research face the prospect of democratization, while other product functions do not? This question is just the type of problem we as researchers should tackle. Knowing the answer unlocks some strategy for how to contend with the threat or opportunity of research democratization.

Research democratization

Unless you’ve been quietly sequestered away working in your own corner of the UX world (lucky you!), you’ve heard of research democratization. As far as I can see, the term was first used by Jen Cardello in her 2019 Medium article to mean “empowering non-researcher product team members to conduct some types of research themselves”1.

The discourse for the past 5 years has claimed that democratization is good, bad, or a mix of both2. Occasionally, the question is rhetorically asked, why aren’t other functions being asked to democratize? (see Who “Owns” Research?). This is what I am most curious about, and not just for the sake of rhetoric. What is it about the nature of UX research that makes organizations consider democratization?

My purpose is not to say we should or should not democratize, but that we need to understand the pitfalls. As Erika Hall points out, a major negative consequence of research democratization is that you get “more bad research done by people who don’t know how to do good research.” This is the main argument many research teams cite to stop democratization.

For all the good that democratizing research promotes, it may present an unintended problem – more bad research done by people who don’t know how to do good research.

Erika Hall

Jared Spool used an analogy that sums up a major argument against the anti-democratization camp and what some consider to be gatekeeping: “People don’t stop going to restaurants when they learn how to cook. They appreciate fine cooking even more.” This argument is flawed in a critical way: people know when their cooking outcomes are good or bad. If you make pasta and boil the noodles to mush, you’ll know instantly the texture is wrong.

I doubt many people would still go to a fine dining steakhouse if they couldn’t tell the difference between their own burnt chunk of meat and a perfectly seared filet mignon. This is why the cooking analogy fails: cooking is fundamentally different from UX research. People without some research training cannot tell from output artifacts whether the underlying research was rigorous or not. This is why UX research has to contend with democratization and other domains do not.

Note: Erika Hall used a similar analogy to say that bad surveys do not stink, but this post argues that the metaphor extends to all UX research methods and output artifacts.

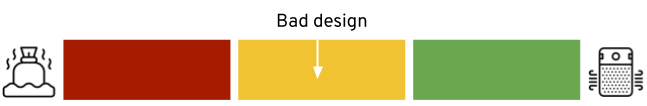

The smell-o-meter for bad output

I’m adopting a highly scientific ‘smell-o-meter’ to analogize the situation. When the output of a product process doesn’t work and it’s easily visible, this means bad output stinks. This would be like a raw fish filet going bad – it’s obvious immediately to your nose that you shouldn’t cook with or eat the fish.

On the far end, when the output of a product process doesn’t work and it’s hard to assess that poor quality, this means the bad output smells totally fresh. This would be like a toxin in improperly canned goods – there’s no way to smell or taste that the canned food is quite dangerous to cook with and eat.

Going beyond the food metaphor, where do UX research and other product processes fall on the scale? Let’s start with the smelly end of our smell-o-meter.

Why do only engineers engineer software?

Engineering is on the smelly end of the smell-o-meter.

No one engineers software but engineers. PMs aren’t shipping code they’ve written and UX researchers aren’t making pull requests. Why don’t others attempt to engineer code for the feature they want in a product? They know they can’t do it. And it’s obvious that they can’t, if they try to do it without proper skills.

If an unskilled-at-coding UX researcher attempts to write code, it quite simply will fail. Immediately. “Hello world” won’t appear, the button will click uselessly with no action, or the screen will just remain black.

Bad engineering stinks. Anyone can tell when something is poorly engineered because it fails or breaks in an obvious manner.

What about design?

Design starts to go towards the middle of our smell-o-meter.

You don’t need to be a designer to know when some design is good or bad. You’ve almost certainly heard a person who doesn’t work on products exclaim, “this app is so hard to use” or “I think this website is ugly”.

I’m not saying everyone is a designer – you need to be a designer to create good designs. But there is a level of intuition that applies when assessing design. Everyone is a consumer of design because they experience it across the hundreds of systems and applications they use.

Why don’t PMs and engineers just design what they need? Partly, it’s because people can intuitively assess design. This is what allows a PM to see their own design work and assess that it’s not the best (especially when held up against a design colleague’s version). The padding feels off compared with the other apps they use (even if they don’t know the design jargon), or they know the menu that takes four levels to click through feels too long.

So, bad design stinks. Bad design doesn’t smell as much as bad engineering – there’s more subjectivity involved in design than engineering. But nevertheless, design remains mostly done by designers because it’s easier to see when design isn’t working.

(Note: there is more nuance here. Designers are better at assessing design technically than others, but the broader argument still stands.)

Why does everyone feel like they can do UX research?

UX research is on the far end of our smell-o-meter, where bad UX research output still smells clean and fresh. This causes inexperienced people to not know when their research work has gone poorly.

A research project’s output can look nearly identical whether a study is designed well or poorly. Unlike engineering, where a screen simply goes black if the code is broken, bad UX research can still have a shiny, coherent research artifact at the end. It just won’t have valid insights (as in, not truly answering the research question).

Example:

Say you have a new app, and, as a person with no research training, you want to know if people will use it. You’ll simply create a survey, send it to an online panel, and ask “From 1-5, how likely are you to use this product?”.

With 500 responses and 90% of respondents reporting that they are very or extremely likely to use your product, you conclude that it’s really likely your product will succeed with some product-market fit. The deck looks great and the evidence seems overwhelming.
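A rough sketch of why this number feels so solid: using only the figures from the example (500 responses, 90% likely), the textbook margin of error comes out small. The function name `margin_of_error` is illustrative, and the calculation assumes a simple random sample and the normal approximation at a 95% level, which an online panel does not necessarily satisfy.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Normal-approximation margin of error for a proportion (z=1.96 is ~95%)."""
    return z * math.sqrt(p * (1 - p) / n)

n = 500   # responses in the example survey
p = 0.90  # share answering "very" or "extremely" likely

moe = margin_of_error(p, n)
low, high = p - moe, p + moe

# Prints: 90% +/- 2.6% (roughly 87.4% to 92.6%)
print(f"{p:.0%} +/- {moe:.1%} (roughly {low:.1%} to {high:.1%})")
```

A tight interval like this only describes sampling error. It says nothing about whether the question measures what the team actually needs to know.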

What’s missing in this example? To name just two key problems, this approach ignores the fact that (a) asking people to predict their future behavior is a poor indicator of actual future use and (b) data from online panels show a strong acquiescence (overly positive) bias in survey responses. The findings are not obtained in a way that will provide valid evidence for the research question.

These misgivings about the research aren’t known or shown in the results deck. The key insight built on shaky ground looks great on a slide deck and fits into a product strategy just as neatly as another insight that was done rigorously. So, the team launches anyway. If the product truly has little product-market fit, the example research design would not provide any evidence of this, and the launch would fail to gain traction with users.

Anyone can learn to do what we do in UX research. It’s not intrinsically harder than other fields. However, you DO need to learn it to avoid major mistakes. Without training on the pitfalls of UX research, you are almost sure to make them.

Bad UX research does not stink. Anyone without training can create poor research and the output looks just as convincing to the untrained eye. This is the reason why UX research uniquely feels the push for democratization.

The way forward is less clear to me, and I suppose to the field as a whole, as evinced by the many articles from all points of view on research democratization. Still, this framework for understanding the nature of UX research indicates an area of focus that may reduce the chances of landing in a democratization pitfall: impact tracking.

(Note: there is a debate as to why UX researchers want to let others do things like usability testing but not generative interviews. Spool says disdainfully about those with this approach, “We will carve out small pieces and let you do your thing”. There is a good reason UX researchers do this, mainly that some kinds of research outputs smell fresher when they’re bad than others.3)

Everything stinks eventually and what to do

Okay, so the smell-o-meter applies best in the short term. Over time, everyone at an organization could find out what worked or didn’t by how the company performs. With our research example, even though the “data” pointed to 90% of people wanting to use the product, that number will be put to the test quickly when the product launches. But that’s much too late. Research exists to reduce risk preemptively. If a team assesses research quality only by how the product performs, research has little value. Impact tracking can’t prevent a bad study that is already complete, but it can start a data-driven approach to measuring when democratization is or isn’t working.

Focus on impact

If we believe the premise that high quality research is more effective at helping organizations, then a clearer process for tracking impact would show leadership why (poorly executed) democratization is bad for the organization. Our example PMF survey study would not be saved by its shiny deck. Bad research cannot hide behind polished research artifacts when there is a way to see how the output influences the organization for the worse.

So, we arrive at our call to action as researchers: we need to make impact tracking so straightforward and formulaic that those without adept research skills are expected to demonstrate their value through it, beyond just a research artifact.

This article isn’t big enough to show how to track impact more effectively and codify it into research operations. In fact, even with the many talented researchers who have written about the topic (see the pieces by Lizzy Burnam, Eli Goldberg, Ruby Pryor, and Caitlin Sullivan in the references, among others), tracking impact remains challenging for the best researchers in my experience. However, I believe it is possible if we continue to share our wins and resources with the research community at large. Repeatable, effective impact tracking is the best way researchers can show how research expertise leads to better business outcomes.

Conclusion

The topic of research democratization has been hotly debated since it emerged4. The pressure to (not) democratize UX research is especially strong because of the unique nature of UX research outputs. Unlike engineering, design, etc., the output quality of research isn’t clearly discernible by those without training in the field. To avoid producing more bad research through a democratized approach, organizations need to closely track the research impact of those they employ to do research. This will allow teams to ensure they see the true positive or negative outcomes from conducting democratized research.

Ultimately, I’m not here to argue for or against democratization (even though I have my opinions). I wanted to illustrate why UX research faces the pressure to democratize, in hopes that this knowledge will help teams better avoid the pitfalls it brings. This could mean not democratizing at all, or democratizing with the pitfalls in mind. It all depends on the team and its available resources.

Remember the quote from Erika Hall for the major pitfall of democratization – we don’t want more bad research. The challenge is that it’s hard to show what is good and bad in a typical product context because bad research output doesn’t stink. Through a more robust impact tracking process, product teams can assess when democratization is or isn’t working.

Appendix

Footnotes

- The concept of research democratization: This builds on a tradition of the same concept by other terms, like Kate Towsey’s people who do research or the countless other articles that don’t have a catchy name for the concept. ↩︎

- More democratization discussion links: The discussion of democratization exploded in the UX research community, with some pushing forward to help teams do so (e.g., Democratize user research in 5 steps) and others ringing alarm bells as loudly as they can (e.g., Democratization won’t save research). Eventually, the conversation dug into the nuance of this controversial topic (e.g., Democracy or Tyranny in UX Research… Is there a Middle Ground?). ↩︎

- Some research is smellier than other kinds of research: Spool says in the article “doctors don’t mind people putting band-aids on themselves” to describe why researchers should let non-researchers do research. However, this analogy doesn’t hold up under scrutiny. (1) The product equivalent for this is not a PM doing research, but a user doing an in-app workaround to fix their issue. The analogy for a PM doing research is one professional doing the work of another professional. A better analogy is the scope of what a nurse can do. A nurse cannot make a medical decision to give whatever medication they want. They can only give medications/procedures based on formalized protocols that make a nebulous situation into a clear decision tree, approved by a doctor. This would be similar to having clear protocols for non-UXRs to do usability testing. (2) Summative research has a much better chance of being done rigorously by non-researchers than something like jobs-to-be-done interviews or mental models. Usability testing is more formulaic, and therefore, more easily delegated. Further, it can be more easily seen if the outputs are valid. Usability testing research can be sneaky (presenting small numbers as large numbers, cherry-picking, etc.), but the domain is much closer to design. This allows for more intuitive checks of the research output recommendations against the designs being tested. On the other hand, generative research methods allow product people to ask poor interview questions (“would you use this product?”) and get outputs that appear valid on the surface with little to check assumptions against. ↩︎

- Democratization as a trendy topic: The topic of research democratization has somewhat faded, especially in the harsh realities of the current market downturn and layoffs, often being called a research reckoning (see Erika Hall’s post). Does that mean democratization doesn’t matter anymore? No. The question of democratization comes from the fundamental nature of research, specifically how its output isn’t clearly indicative of the study quality or rigor. This means the topic is likely to come back again and again. ↩︎

References

Appendix links excluded

Tell us what you really think: Jared Spool on democratizing research – Sean Bruce

How to Track the Impact of Your UX Research – Lizzy Burnam

How our product design framework guides UX research – Jen Cardello

Find Your Hidden UX Research Impact – Eli Goldberg

Who “Owns” Research? – Debbie Levitt

LinkedIn post: “I measure UX Research impact at 4 levels.” – Ruby Pryor

Tracking the impact of UX Research: a framework – Caitlin Sullivan

Webinar recap: People who do research – featuring Erika Hall – Jack Wolstenholm

Image credits

Photo of Woman Wearing White Top Holding Purple Flowers – Aidi Tanndy

Air Purifier free icon – IconBaandar

Thanks

Thanks to Lawton Pybus and Thomas Stokes for their help reviewing this post.