This is part one, which explains when and why to run a cognitive survey test. Jump to part two if you just want to learn how to conduct one.

You’re a UX researcher creating a survey. You’ve done qualitative research so you know the areas you want to measure quantitatively. You’ve aligned your stakeholders so everyone is informed of the potential outcomes and impact. Do you program it and launch it now?

Depending on the survey’s goals and complexity, you should consider doing cognitive testing on your survey. If you don’t, you may end up asking users questions they don’t know how to respond to.

A now classic meme about the Net Promoter Score being used in an atypical context.

What is cognitive testing?

Cognitive testing is a method for discovering how ‘answerable’ your survey questions are. Researchers use it before a survey is fully launched to ensure that each person’s responses capture the data the survey is intended to collect.

The protocol is similar to a structured interview or a usability test that uses a think-aloud protocol, with a small sample of participants who are representative of the target audience. The interviewer asks participants to think aloud as they complete the survey, then asks follow-up questions about their thought process, such as why they chose a particular answer or what they found confusing about the question wording. A researcher can also use an unmoderated tool to have participants think aloud as they complete the survey asynchronously.

At the end of this process, the researcher can tell which questions work well and which need adjusting so that respondents can accurately and reliably provide high-quality responses.

When should you consider cognitive testing?

As with any research method, there are reasons to use cognitive testing and reasons not to. If you have access to rapid, unmoderated testing tools, there is almost no reason not to cognitively test your survey. It can be done in a single day, with significant benefits to the survey results.

Without unmoderated tooling, the resource calculation is more nuanced. The two main reasons to test with a longer, moderated approach are (1) if you have an exceptionally complex survey or (2) if you need exceptionally high-quality data the first, or only, time you run the survey. In a perfect world, we would always cognitively test our surveys. Since we live in an imperfect world bound by resource and time constraints, read on to learn when you should consider using the cognitive testing method on your survey.

Rapid unmoderated cognitive survey tests

Unmoderated testing services, such as UserTesting, have proliferated in recent years. These allow researchers to conduct tests asynchronously and with quick access to panel participants. With an unmoderated testing service, there is almost no excuse to avoid testing your survey on a general consumer pool (though it is harder with B2B participants).

It’s possible to launch a survey first thing in the day, collect data throughout the day, and spend the last hour of the day analyzing the results. Because of this accessibility, researchers should strive to always cognitively test surveys (with proper tooling and participant access).

If you don’t have access to this tooling, or your participants are hard to recruit for such a test, there are other factors to consider when deciding whether the extra effort is worthwhile.

Complex survey questions and design

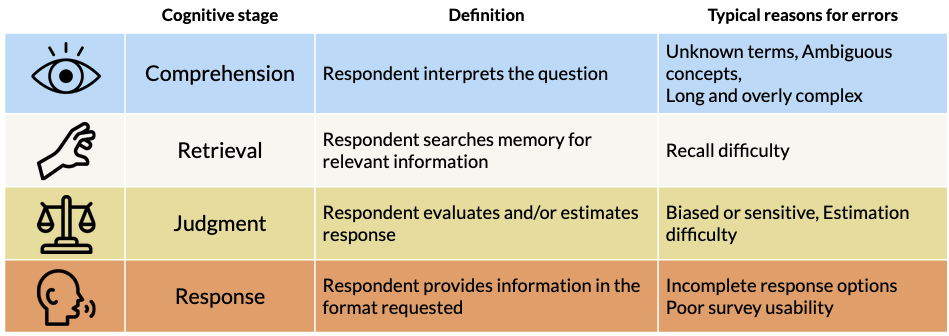

Some types of questions are easier for respondents to answer than others, depending on which cognitive process is engaged when the respondent runs into trouble. It’s helpful to know how a person theoretically responds to a survey question so you can heuristically assess where and why a respondent may get stuck on your question.

You should consider cognitive testing when:

- Comprehension stage: your questions are abstract or complex.

  - Are you measuring something abstract like trust in an AI tool or consumer tech-savviness?

  - Does your question use the technical jargon of your product or users’ context?

- Retrieval stage: you’re not sure if participants can easily recall what you’re asking.

  - Are you asking about a small moment that happened within the past two weeks?

  - Are you asking about the frequency of a user’s behavior three months ago or further back?

- Judgement stage: your question is sensitive or likely to come under a clear bias.

  - Are you asking about a respondent’s medical history or political beliefs?

  - Does your survey anchor or prime participants based on previous parts of the survey?

- Response stage: your survey instrument is complex or incomplete.

  - Are you unsure if your response options cover all of the possible responses?

  - Does your survey use an unfamiliar interface pattern (e.g., 0-100 scale, rotational dial button)?

  - Are you using extreme or unfamiliar adjectives in your Likert response items?

If your questions or survey meet any of the criteria above, it may be worth considering cognitive testing to improve your data quality. (Note: the bullet points are just examples, not exhaustive lists.)
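For teams that like to keep this heuristic review alongside the survey draft, the checklist above can be written down as a small script. This is only a minimal sketch: the names `CHECKLIST`, `flagged_stages`, and `should_consider_cognitive_testing` are hypothetical, the criteria are paraphrased from the example bullets (which are not exhaustive), and the "any criterion met" rule simply mirrors the guidance in the paragraph above.

```python
# Hypothetical checklist: stage names and criteria paraphrase the example
# bullets in this article. They are illustrations, not an exhaustive list.
CHECKLIST = {
    "comprehension": [
        "Measures an abstract construct (e.g., trust, tech-savviness)",
        "Uses product or domain jargon",
    ],
    "retrieval": [
        "Asks about a small moment from the past two weeks",
        "Asks about behavior frequency three or more months ago",
    ],
    "judgement": [
        "Asks about a sensitive topic (e.g., medical history, politics)",
        "Earlier survey content may anchor or prime the answer",
    ],
    "response": [
        "Response options may not cover all possible answers",
        "Uses an unfamiliar interface pattern",
        "Uses extreme or unfamiliar adjectives in Likert items",
    ],
}


def flagged_stages(answers):
    """Return the stages where at least one criterion was marked True.

    `answers` maps a stage name to a list of booleans, one per criterion
    in CHECKLIST[stage], in the same order. Stages left out of `answers`
    are treated as not flagged.
    """
    flagged = []
    for stage, criteria in CHECKLIST.items():
        marks = answers.get(stage, [])
        if marks and len(marks) != len(criteria):
            raise ValueError(f"{stage}: expected {len(criteria)} answers")
        if any(marks):
            flagged.append(stage)
    return flagged


def should_consider_cognitive_testing(answers):
    """Assumed rule: meeting any single criterion is enough to consider testing."""
    return bool(flagged_stages(answers))


# Example review of a draft question that uses jargon and an extreme adjective.
review = {"comprehension": [False, True], "response": [False, False, True]}
print(flagged_stages(review))  # ['comprehension', 'response']
```

The flagged stages suggest where to focus probing questions during the test; they do not replace the researcher's judgement about whether testing is worth the effort.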

One benefit of using existing surveys is that these issues have normally been worked out already (if the survey was robustly developed). Concepts like usability or feature satisfaction can be nuanced, and most users don’t have the vocabulary for them that people who work on products all day long do. It’s a good idea to opt for an existing scale if it fits your purposes. If nothing does, you may want to cognitively test!

Tracking surveys or high impact projects

Even if your survey appears straightforward based on your heuristic evaluation, there may still be a case for cognitive testing if the margin for error needs to be very low.

Tracking surveys (poor quality propagates)

As quantitative UX research grows, more UX teams are in charge of bespoke tracking surveys for key UX metrics. These tracking surveys often run over quarters and years to assess how a product team is hitting its UX objectives. A survey with poor quality data will make your hard work quite useless over time (and tracking surveys can be a lot of work). Further, the point of a tracking survey is to measure over time. Changing the wording of a question at any point means you can no longer compare new results with those collected before the change. Once again, using an existing survey for common constructs can help you get around this.

High impact (poor quality has a big risk)

Besides surveys with a long lifespan, you may really need your survey to be correct. Your product team may be hinging an entirely new product direction on the results. Or, your company may decide to go into brand new global markets based on the results. Cognitive testing can help you be extra sure your results are sound.

Inside any company, entire teams grow accustomed to the specific terminology of the product design and the key words used in company strategy. When researchers carry these terms directly into surveys, users often don’t know the critical concepts of a product or strategy well enough to give an accurate response. Researchers try to remain objective, but that isn’t always possible. Cognitive testing helps check these assumptions for your strategic surveys.

Other tips

- You may learn from open response data that your questions don’t make sense to participants. If you ask an open question as a follow-up to your measurement question and the responses are about something slightly or totally different, the question may need work. Consider this a decision point: either pause, test, and revise the survey, or note that a change is needed for future iterations.

- You can run cognitive tests as standalone projects, but you can also add them onto another project. If you only have a couple of questions in your survey, they may fit neatly into the end of other interviews or usability tests that your team is already running.

- Global/multi-language surveys ideally benefit from cognitive testing in each survey language. Every time a question is rewritten, new potential for awkward or unclear wording is introduced. This multiplies the resources required for surveys, which isn’t always feasible. Consider having a native speaker in your company who isn’t the translator evaluate the translations as at least a cursory quality check.

Conclusion

If you run surveys as part of your job, cognitive testing is a tool you should have in your toolkit for smoothing out confusing or unanswerable questions. With the right tools, you should almost always conduct cognitive testing on non-B2B participant groups. Even when it takes more effort or time, complex surveys and surveys for high-risk product decisions benefit particularly from cognitive testing.

If you are interested in doing this type of testing, see part two for a primer on how to conduct a cognitive survey test.
